This post is part 1 of a series about Greenfield and was originally posted on Twitter in 2021.

It basically started with a discussion in #wayland on IRC, where it was suggested that one should use (S)RTP for real-time video streams. Browsers lack such APIs and only offer WebRTC, so the first step was to check whether that could be used.

It turns out it's nearly impossible to attach metadata to a video frame coming from WebRTC, something you really need for the Wayland protocol, since you have to link each buffer to a commit request. (Ironically, only Microsoft's browser, Edge if I remember correctly, supported this at the time.)

So exit WebRTC video, hello GStreamer. GStreamer was surprisingly easy to integrate, as you can basically set up an entire pipeline with nothing more than a pipeline string, an input file and an output file. WebRTC was still used for transport, through a data channel.
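Once frames travel over a data channel instead of a WebRTC media track, each one can carry whatever metadata the compositor needs. As a minimal sketch, assuming a hypothetical 8-byte header holding the surface ID and a commit serial in front of each encoded H.264 frame (not Greenfield's actual wire format), packing and unpacking could look like this:

```typescript
// Hypothetical frame message layout (little-endian):
//   [surfaceId: u32][commitSerial: u32][encoded H.264 payload ...]
const HEADER_BYTES = 8;

interface EncodedFrame {
  surfaceId: number;    // which wl_surface the frame belongs to
  commitSerial: number; // ties the frame to one specific wl_surface.commit
  payload: Uint8Array;  // the encoded video frame itself
}

function packFrame(frame: EncodedFrame): ArrayBuffer {
  const buffer = new ArrayBuffer(HEADER_BYTES + frame.payload.byteLength);
  const view = new DataView(buffer);
  view.setUint32(0, frame.surfaceId, true);
  view.setUint32(4, frame.commitSerial, true);
  // Copy the payload right after the header.
  new Uint8Array(buffer, HEADER_BYTES).set(frame.payload);
  return buffer;
}

function unpackFrame(buffer: ArrayBuffer): EncodedFrame {
  const view = new DataView(buffer);
  return {
    surfaceId: view.getUint32(0, true),
    commitSerial: view.getUint32(4, true),
    // A view into the received buffer, no copy needed.
    payload: new Uint8Array(buffer, HEADER_BYTES),
  };
}
```

A sender would pass the result of `packFrame` to `RTCDataChannel.send()`, which accepts an `ArrayBuffer`; the receiver can then use `commitSerial` to match the decoded frame to the right commit.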

Note that at this point there was still zero Wayland code. The next step was to see whether we could decode a video frame and control the exact moment it is presented on screen. HTML5 video offers Media Source Extensions (MSE), which let you feed it chunks of video.

Great, all we need to do is feed it single-frame chunks and we should be set. This works great, except that it doesn't. MSE doesn't tell you when it's showing the frame, and it also expects MP4 container data and playback timing information (useless overhead for us). More complexity we don't want or need. Exit MSE.

Looks like we need to handle the video frame decoding ourselves. After a trial-and-error journey through H.264 decoders in asm.js and WASM, there was finally something that ticked all the boxes: TinyH264.

Great, all the functionality was there. But would it be fast enough? Luckily, the whole pipeline could do 1080p@30fps. Good enough for now. Time to port the Wayland protocol to JavaScript!
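To put 1080p@30fps in perspective, here is the back-of-the-envelope arithmetic. It assumes the decoder emits 8-bit YUV 4:2:0 (I420) frames, which is typical for H.264 decoders but is an assumption here, not a measured property of this pipeline.

```typescript
// Back-of-the-envelope numbers for a 1080p@30fps decode pipeline.
// Assumption: 8-bit YUV 4:2:0 (I420) output, i.e. a full-resolution
// luma plane plus two chroma planes at half width and half height.
function i420FrameBytes(width: number, height: number): number {
  const luma = width * height;
  const chroma = 2 * Math.ceil(width / 2) * Math.ceil(height / 2);
  return luma + chroma;
}

// Time available per frame, in milliseconds, at a given frame rate.
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

const bytesPerFrame = i420FrameBytes(1920, 1080); // 3,110,400 bytes
const bytesPerSecond = bytesPerFrame * 30;        // 93,312,000 bytes/s
const budgetMs = frameBudgetMs(30);               // ~33.3 ms
```

So decoding, color conversion and uploading each frame all have to fit in roughly 33 ms, while about 93 MB of raw pixel data per second flows through the page.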

If you want to run a Wayland compositor in your browser, you have to:

- make sure you can handle native file descriptors over the network

- make sure you can deal with native Wayland server protocol libraries (DRM and SHM implementations)... over the network

- deal with slow clients without blocking other clients

The solutions:

- native file descriptors in the protocol are represented as URLs (strings)

- native protocol libraries are handled by a proxy compositor and a libwayland fork

- slow clients are handled by an asynchronous compositor implementation
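A file descriptor is just an integer that only means something inside one process on one machine, so once the compositor lives in a browser somewhere else, it has to be swapped for something that can be resolved remotely. A minimal sketch of the URL idea, assuming a hypothetical layout where the URL names the host that owns the descriptor and carries the fd number as a query parameter (Greenfield's real format may well differ):

```typescript
// Hypothetical: a native fd, identified by the host that owns it.
interface RemoteFd {
  host: string; // e.g. "ws://app-host:8081" (hypothetical)
  fd: number;   // descriptor number, only meaningful on that host
}

// Turn a native fd into a string that can travel in a protocol message.
function fdToUrl(remote: RemoteFd): string {
  const url = new URL(remote.host);
  url.searchParams.set("fd", String(remote.fd));
  return url.toString();
}

// Parse the string back, rejecting anything without a valid fd number.
function urlToFd(text: string): RemoteFd {
  const url = new URL(text);
  const raw = url.searchParams.get("fd");
  if (raw === null || !/^\d+$/.test(raw)) {
    throw new Error(`not a file descriptor URL: ${text}`);
  }
  return { host: url.origin, fd: Number(raw) };
}
```

Presumably whoever receives such a URL then asks the owning host for the descriptor's contents (a keymap, clipboard data, and so on) instead of receiving the fd itself.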

The project was featured on Phoronix.

Great success! But the work was far from over. Things were still too slow, too complex, and not really usable. 😿

Part 2

It turns out writing an async Wayland compositor is really hard. Eventually WebRTC was dropped completely and replaced by WebSockets, each application surface is now a WebGL texture instead of its own HTML5 canvas (a canvas doesn't do double buffering), and more.
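Why double buffering matters: in Wayland, requests such as `wl_surface.attach` and `wl_surface.damage` only change a surface's *pending* state, and `wl_surface.commit` applies it atomically, so the compositor keeps showing the last committed buffer until the next commit. A simplified model of that state, covering only the buffer and damage (real surfaces have more double-buffered state) and not taken from Greenfield's code:

```typescript
interface Rect { x: number; y: number; width: number; height: number; }

// Simplified double-buffered surface state, modeled on wl_surface semantics.
class Surface<B> {
  private pendingBuffer: B | null = null;
  private newlyAttached = false; // did attach happen since the last commit?
  private pendingDamage: Rect[] = [];

  // What the compositor actually draws; only changes on commit.
  currentBuffer: B | null = null;
  currentDamage: Rect[] = [];

  attach(buffer: B | null): void {
    this.pendingBuffer = buffer;
    this.newlyAttached = true;
  }

  damage(rect: Rect): void {
    this.pendingDamage.push(rect);
  }

  commit(): void {
    // Without a new attach, the previously committed buffer stays current.
    if (this.newlyAttached) this.currentBuffer = this.pendingBuffer;
    this.currentDamage = this.pendingDamage;
    // Reset pending state for the next frame.
    this.pendingBuffer = null;
    this.newlyAttached = false;
    this.pendingDamage = [];
  }
}
```

With one WebGL texture per surface, the compositor can upload a buffer only when it is committed and keep drawing the old texture in the meantime.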

Meanwhile, another idea was brewing. Since we now have a compositor in the browser that talks vanilla Wayland, we don't *have* to run the apps remotely. They can also run directly in your browser, using web workers!
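Talking vanilla Wayland means speaking its wire format, which is simple enough to produce from a web worker. Each message starts with an 8-byte header: the object ID, then a 32-bit word holding the message size in bytes (upper 16 bits) and the opcode (lower 16 bits). Integers, object IDs and new IDs are 32-bit words; strings are a 32-bit length that includes the NUL terminator, followed by the bytes padded to a 4-byte boundary. A minimal encoder for `uint` and `string` arguments only, assuming little-endian byte order (the protocol uses the host's native order) and leaving out file descriptors, which travel out of band:

```typescript
// Argument kinds covered by this sketch (fixed, array and fd are omitted).
type Arg =
  | { kind: "uint"; value: number }   // also used for object and new_id
  | { kind: "string"; value: string };

const pad4 = (n: number): number => (n + 3) & ~3;
const LITTLE_ENDIAN = true; // assumption: little-endian host

function argSize(arg: Arg, encoder: TextEncoder): number {
  if (arg.kind === "uint") return 4;
  // Length word, then the bytes plus NUL terminator, padded to 4 bytes.
  return 4 + pad4(encoder.encode(arg.value).length + 1);
}

function encodeMessage(objectId: number, opcode: number, args: Arg[]): ArrayBuffer {
  const encoder = new TextEncoder();
  const size = 8 + args.reduce((sum, a) => sum + argSize(a, encoder), 0);
  const buffer = new ArrayBuffer(size);
  const view = new DataView(buffer);
  view.setUint32(0, objectId, LITTLE_ENDIAN);
  view.setUint32(4, ((size << 16) | opcode) >>> 0, LITTLE_ENDIAN);
  let offset = 8;
  for (const arg of args) {
    if (arg.kind === "uint") {
      view.setUint32(offset, arg.value, LITTLE_ENDIAN);
      offset += 4;
    } else {
      const bytes = encoder.encode(arg.value);
      view.setUint32(offset, bytes.length + 1, LITTLE_ENDIAN);
      new Uint8Array(buffer, offset + 4, bytes.length).set(bytes);
      // NUL terminator and padding are already zero in a fresh ArrayBuffer.
      offset += 4 + pad4(bytes.length + 1);
    }
  }
  return buffer;
}

function decodeHeader(buffer: ArrayBuffer): { objectId: number; opcode: number; size: number } {
  const view = new DataView(buffer);
  const word = view.getUint32(4, LITTLE_ENDIAN);
  return { objectId: view.getUint32(0, LITTLE_ENDIAN), opcode: word & 0xffff, size: word >>> 16 };
}
```

For example, `encodeMessage(1, 1, [{ kind: "uint", value: 2 }])` encodes `wl_display.get_registry` (object 1, opcode 1) with new registry ID 2 as a 12-byte message.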

A proof of concept (PoC) was implemented and promptly featured on Phoronix again.

Building on this idea, there was one big blocker: there are literally no good widget libraries that let you render directly into an HTML5 SharedArrayBuffer, let alone onto a WebGL canvas. So to prove the idea was feasible, I experimented to see if I could get something going...
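In the browser, shared memory means a `SharedArrayBuffer`. As a sketch of the kind of target such a widget library would need to draw into, here is a hypothetical analogue of `wl_shm_pool.create_buffer`: one `SharedArrayBuffer` acts as the pool, and each buffer is a view at an offset with a width, height and stride, assuming a 32-bit ARGB8888 pixel format. The function names are illustrative, not an existing API.

```typescript
const BYTES_PER_PIXEL = 4; // assumption: 32-bit ARGB8888

interface ShmBuffer {
  width: number;
  height: number;
  stride: number;     // bytes per row, may include padding
  pixels: Uint8Array; // view into the shared pool, no copy
}

function createShmPool(size: number): SharedArrayBuffer {
  return new SharedArrayBuffer(size);
}

function createBuffer(
  pool: SharedArrayBuffer, offset: number, width: number, height: number, stride: number,
): ShmBuffer {
  if (stride < width * BYTES_PER_PIXEL) throw new Error("stride too small");
  if (offset < 0 || offset + stride * height > pool.byteLength) {
    throw new Error("buffer exceeds pool");
  }
  return { width, height, stride, pixels: new Uint8Array(pool, offset, stride * height) };
}

function setPixel(buf: ShmBuffer, x: number, y: number, argb: number): void {
  const i = y * buf.stride + x * BYTES_PER_PIXEL;
  // ARGB8888 is stored as a little-endian 32-bit value: bytes B, G, R, A.
  buf.pixels[i] = argb & 0xff;
  buf.pixels[i + 1] = (argb >>> 8) & 0xff;
  buf.pixels[i + 2] = (argb >>> 16) & 0xff;
  buf.pixels[i + 3] = (argb >>> 24) & 0xff;
}
```

Because buffers are views on the pool, the pool can be posted to the compositor once and reused for every frame without copying pixels. Note that current browsers only expose `SharedArrayBuffer` on cross-origin isolated pages.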

First, a good drawing library was needed. Since none fit all the needs, I resorted to compiling Skia to WASM. This was a challenge, as I had no C++ experience, but I eventually got something working... and Google noticed!

It turns out they wanted to do the same for their upcoming port of Flutter to the browser (although they never mentioned that at the time, but 1+1=...). Pretty cool: it meant I no longer had to spend my spare time on it and could eventually start on the next phase.

The next phase: write a custom React renderer that outputs to an offscreen WebGL canvas, which talks the Wayland protocol to a compositor in your browser, all while running in a web worker. No biggie.

Writing a custom React renderer is hard. Not because the code is hard (it's actually quite easy), but because there is basically no documentation. It doesn't help that the renderer API has two modes and all the documentation covers the first one... I wanted the second.

Eventually something was working, and the basis for a browser widget toolkit that can output to offscreen (and onscreen) WebGL is now in place.

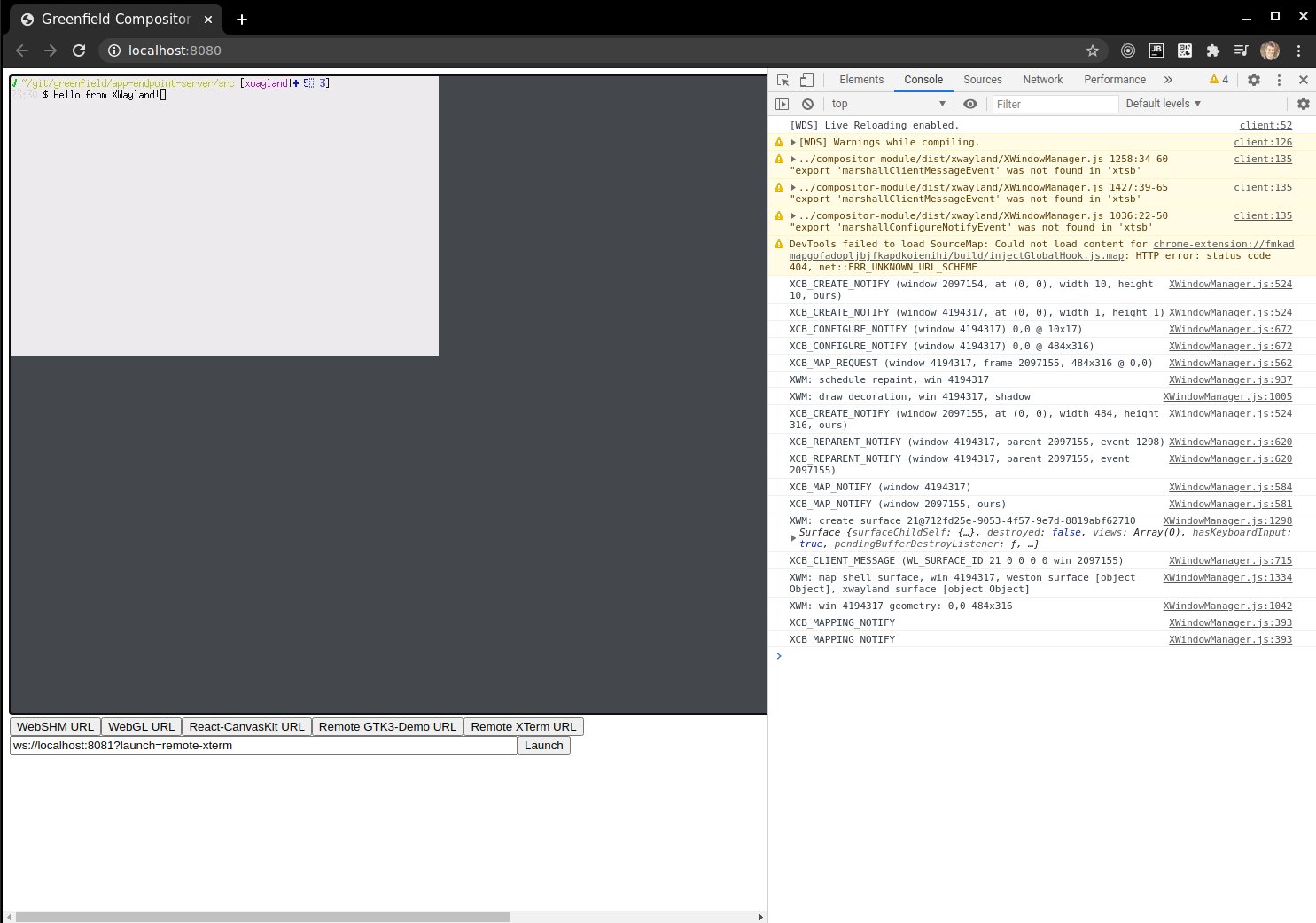

Great. So now the pure-browser Wayland app use case has been proven. But other things are still missing. Not all remote Linux apps run on Wayland; in fact, most still use X11. So if we want to support all Linux apps, we need to support Xwayland... in the browser.

The way Xwayland works is that the X11 server presents itself as a Wayland client, but it still requires the Wayland compositor to act as an X11 window manager. In our case the compositor runs in the browser, which means the browser has to function as an X11 client.
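Acting as the X11 window manager includes matching each X11 window to the `wl_surface` Xwayland creates for it. As I understand Weston's xwm, Xwayland sends the window manager a `WL_SURFACE_ID` client message carrying the surface's object ID, and because that message and the surface creation arrive over different connections, either one can be handled first. A minimal sketch of that pairing logic; the class and method names are hypothetical:

```typescript
type XWindowId = number;
type SurfaceId = number;

// Pairs X11 windows with Xwayland's wl_surfaces, in whichever order
// the two halves of the information arrive.
class XwaylandPairing<S> {
  private surfaces = new Map<SurfaceId, S>();         // surfaces not yet claimed
  private unpaired = new Map<SurfaceId, XWindowId>(); // windows waiting for a surface
  readonly paired = new Map<XWindowId, S>();

  // Called when Xwayland creates a wl_surface on its Wayland connection.
  onSurfaceCreated(id: SurfaceId, surface: S): void {
    const window = this.unpaired.get(id);
    if (window !== undefined) {
      this.unpaired.delete(id);
      this.paired.set(window, surface);
    } else {
      this.surfaces.set(id, surface);
    }
  }

  // Called for an X11 ClientMessage of type WL_SURFACE_ID on `window`.
  onSurfaceIdMessage(window: XWindowId, id: SurfaceId): void {
    const surface = this.surfaces.get(id);
    if (surface !== undefined) {
      this.surfaces.delete(id);
      this.paired.set(window, surface);
    } else {
      this.unpaired.set(id, window);
    }
  }
}
```

A real implementation would also drop entries when windows or surfaces are destroyed.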

Fun fact: there are no libraries that allow a browser/webpage to act as an X11 client. So... let's implement it ourselves! After looking at xcb and how xpyb works, xtsb (X TypeScript bindings) was finally born.
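xcb and xpyb generate their bindings from the XML protocol descriptions in xcb-proto, so every request ends up as a small encoder. X11 requests share a fixed layout: a 1-byte major opcode, one byte of request-specific data, a 2-byte length counted in 4-byte units, then the body, all in the byte order the client chose during connection setup. A hand-written sketch for the core `MapWindow` request (opcode 8), which a window manager sends when honoring a client's map request; it illustrates the encoding and is not xtsb's actual API:

```typescript
const MAP_WINDOW_OPCODE = 8; // core X11 MapWindow

// Encode a MapWindow request for `window` in the connection's byte order.
function encodeMapWindow(window: number, littleEndian: boolean): ArrayBuffer {
  const buffer = new ArrayBuffer(8);
  const view = new DataView(buffer);
  view.setUint8(0, MAP_WINDOW_OPCODE);     // major opcode
  view.setUint8(1, 0);                     // unused for this request
  view.setUint16(2, 2, littleEndian);      // total length: 2 units of 4 bytes
  view.setUint32(4, window, littleEndian); // the window to map
  return buffer;
}
```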

Implementing the X window manager was quite a challenge, but luckily Weston, the Wayland reference compositor, had lit what would otherwise have been a dark path: I could basically port its C code to TypeScript, and eventually got something working!

Btw up until now all development was done during my spare time while working full-time. I also had the privilege of welcoming 2 wonderful kids into the world. So if you tell me you don't have time for x or y, I just think you must have a very healthy sleep schedule.

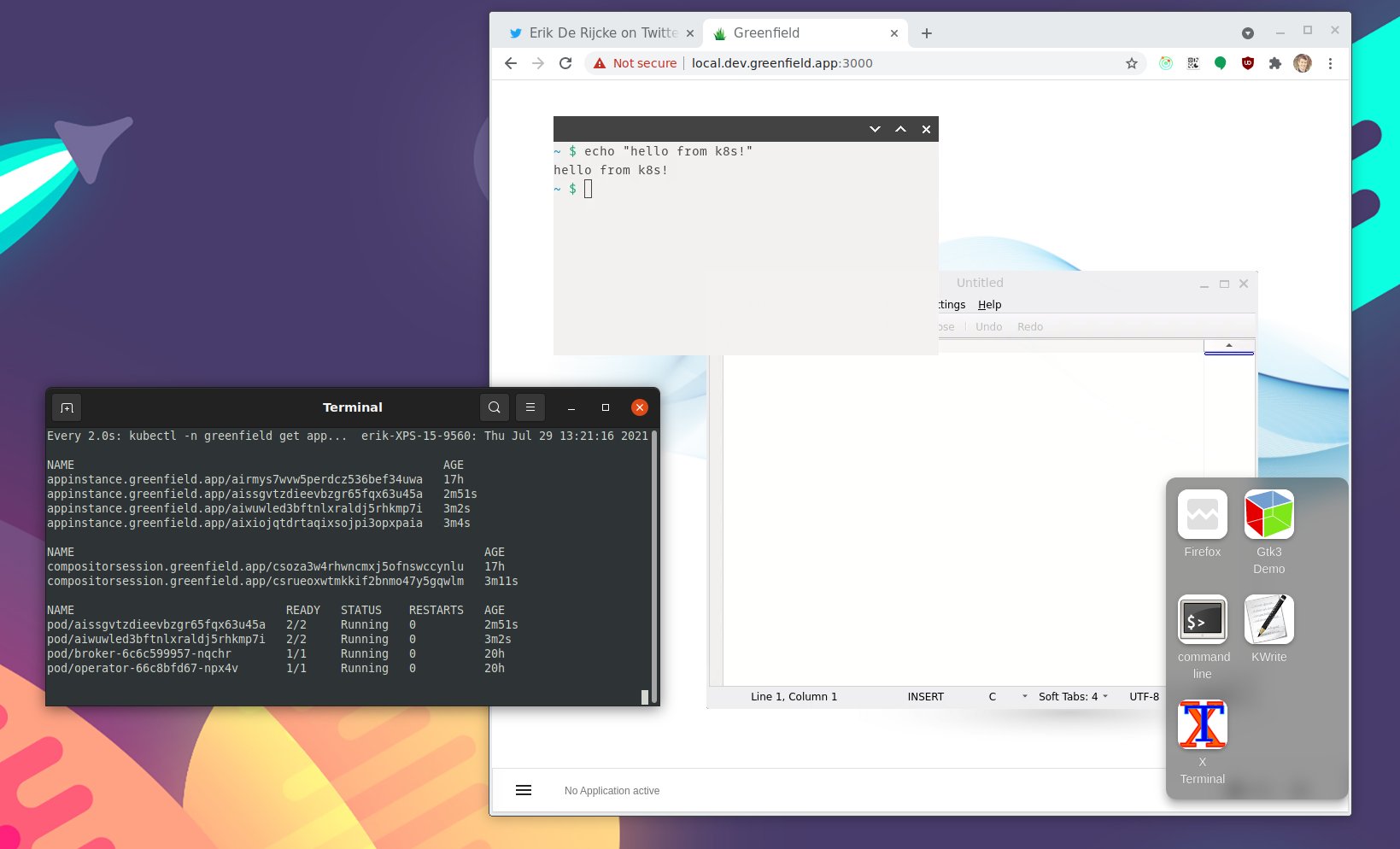

Back to kubernetes. I noticed people had trouble setting everything up. So with a little help from a friend we started implementing a custom kubernetes operator that can manage browser compositor sessions and the applications they display.

A wayland compositor proxy runs as a sidecar inside the pod that hosts the desktop application container. This means that you can distribute all your desktop apps over your entire k8s cluster and finely tune the resources, filesystem and caps the app has access too.

Part 2

Turns out writing an async wayland compositor is really hard. Eventually WebRTC was completely dropped and replaced by WebSockets, each application surface is now a WebGL texture instead of it's own Html5 canvas (canvas doesn't do double buffering) and more.

Meanwhile another idea was brewing. Since we now have a compositor in the browser that talks vanilla wayland. We don't *have* to run the apps remotely. They can run directly in your browser as well using web workers!

A poc was implemented and promptly featured again on Phoronix.

Building on this idea, there was one big blocker. There are literally no good widget libraries that allow you to render directly to a Html5 SharedMemoryBuffer let alone to a WebGL canvas. So to prove this idea was feasible, I experimented if I could get something going...

First there was the need for a good drawing library. Since there are none that fitted al the needs, I resorted to compiling Skia to wasm. This was a challenge, not having any C++ experience but got something working eventually... and Google noticed!

Turns out they were wanting to do the same for their upcoming port of Flutter to the browser (although they never mentioned that at the time but 1+1=...). So pretty cool, meant that I didn't have to put my spare time in it anymore and could eventually start with the next phase.

Write a custom React renderer that outputs to an offscreen webgl canvas, which talks wayland protocol to a compositor in your browser, while running in a web worker. No biggy.

Writing a custom react renderer is hard. Not because it's hard (it's quite easy actually) but because there is basically no documentation about it. It doesn't help that it has 2 render modes and all documentation is about the the first one... I wanted the second one.

Eventually something was working and the basis for a browser widget toolkit that can output to offscreen (and onscreen) webgl is there.

Great. So now the pure browser wayland app use-case has been proven. But there is still other things lacking. Not all remote Linux apps run on wayland, in fact most still use X11. So if we want to support all linux apps, we need to support xwayland... in the browser.

They way xwayland works, is that the X11 server presents itself as a wayland client, but still requires the wayland compositor to act as an X11 window manager. In our case, the compositor is running in the browser. So that means the browser has to function as an X11 client.

Fun fact: there are no libraries that allow a browser/webpage to act as an X11 client. So... let's implement it ourself! Looking at xcb and how xpyb works, xtsb (x typescript bindings) was finally born,

Implementing the X window manager was quite a challenge but luckily Weston the Wayland reference compositor had lit what would otherwise be a dark path, and I could basically rewrite their C code to TypeScript and eventually got something working!

Adding POC Xwayland support was nice (and is still not further implemented for now), but there was still another fundamental issue that was left unaddressed. The whole setup and way of running applications was extremely clumsy and messy... enter kubernetes

Btw up until now all development was done during my spare time while working full-time. I also had the privilege of welcoming 2 wonderful kids into the world. So if you tell me you don't have time for x or y, I just think you must have a very healthy sleep schedule.

Back to Kubernetes. I noticed people had trouble setting everything up, so with a little help from a friend we started implementing a custom Kubernetes operator that can manage browser compositor sessions and the applications they display.

A Wayland compositor proxy runs as a sidecar inside the pod that hosts the desktop application container. This means you can distribute all your desktop apps over your entire k8s cluster and finely tune the resources, filesystem, and capabilities each app has access to.
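The sidecar pattern works because containers in the same pod can share volumes: if the app and the compositor proxy mount the same directory as `XDG_RUNTIME_DIR`, the app finds the proxy's Wayland socket there, just as it would find a local compositor. A sketch of such a pod spec as a TypeScript object, where the images, volume name, socket name and limits are all hypothetical and the actual operator's layout may differ:

```typescript
// Minimal local types; a real operator would use the Kubernetes API types.
interface Container {
  name: string;
  image: string;
  env?: { name: string; value: string }[];
  volumeMounts?: { name: string; mountPath: string }[];
  resources?: { limits: Record<string, string> };
  securityContext?: { capabilities: { drop: string[] } };
}

interface PodSpec {
  containers: Container[];
  volumes: { name: string; emptyDir: Record<string, never> }[];
}

const RUNTIME_DIR = "/run/wayland"; // hypothetical shared XDG_RUNTIME_DIR

function appPodSpec(appImage: string, proxyImage: string): PodSpec {
  const socketMount = { name: "wayland-runtime", mountPath: RUNTIME_DIR };
  // libwayland-client looks for $XDG_RUNTIME_DIR/$WAYLAND_DISPLAY.
  const waylandEnv = [
    { name: "XDG_RUNTIME_DIR", value: RUNTIME_DIR },
    { name: "WAYLAND_DISPLAY", value: "wayland-0" },
  ];
  return {
    containers: [
      {
        // Sidecar: listens on the socket and forwards to the browser.
        name: "compositor-proxy",
        image: proxyImage,
        env: waylandEnv,
        volumeMounts: [socketMount],
      },
      {
        // The desktop app, with its resources and capabilities restricted.
        name: "app",
        image: appImage,
        env: waylandEnv,
        volumeMounts: [socketMount],
        resources: { limits: { cpu: "1", memory: "1Gi" } },
        securityContext: { capabilities: { drop: ["ALL"] } },
      },
    ],
    volumes: [{ name: "wayland-runtime", emptyDir: {} }],
  };
}
```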
